Introduction

For years, quantum computing has been the stuff of science fiction and high-level theoretical physics, a technology promising unimaginable computational power, capable of solving certain problems that would take even the most powerful supercomputers billions of years to crack. From drug discovery to financial modeling to breaking modern encryption, the potential applications are breathtaking. But the question on everyone’s mind has always been: when will it actually become practical and accessible?

The Promise of Quantum Supremacy (and Beyond)

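At its core, quantum computing leverages two peculiar properties of quantum mechanics, superposition and entanglement, to process information in fundamentally new ways. While classical computers use bits that are either 0 or 1, quantum computers use qubits, which can exist in a superposition of 0 and 1. A register of n qubits is described by 2^n amplitudes at once, and entanglement correlates qubits so that they can no longer be described independently. Quantum algorithms use interference among these amplitudes so that wrong answers cancel and right answers reinforce; this, rather than simply trying every answer in parallel, is the source of their potential speedup.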
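To make superposition and entanglement concrete, here is a minimal state-vector sketch in plain Python. No quantum SDK is assumed, and the helper names are hypothetical. It prepares two qubits in |00⟩, applies a Hadamard gate to put qubit 0 into superposition, then a CNOT to entangle it with qubit 1, producing a Bell state whose only possible measurement outcomes are 00 and 11.

```python
import math

# Minimal state-vector sketch (pure Python; helper names are hypothetical).
# Basis index i encodes the register's bits; qubit 0 is the least-significant bit.

def zero_state(n):
    """Return |00...0> as a list of 2**n complex amplitudes."""
    state = [0j] * (2 ** n)
    state[0] = 1 + 0j
    return state

def apply_hadamard(state, q):
    """Hadamard on qubit q: maps |0> and |1> to equal superpositions."""
    s = 1 / math.sqrt(2)
    new = state[:]
    for i in range(len(state)):
        if not (i >> q) & 1:            # visit each (bit=0, bit=1) pair once
            j = i | (1 << q)
            a, b = state[i], state[j]
            new[i] = s * (a + b)
            new[j] = s * (a - b)
    return new

def apply_cnot(state, control, target):
    """Flip the target bit wherever the control bit is 1 (an entangling gate)."""
    new = state[:]
    for i in range(len(state)):
        if (i >> control) & 1:
            new[i ^ (1 << target)] = state[i]
    return new

def probabilities(state):
    """Born rule: probability of each basis outcome is |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

bell = apply_cnot(apply_hadamard(zero_state(2), 0), control=0, target=1)
print([round(p, 3) for p in probabilities(bell)])   # [0.5, 0.0, 0.0, 0.5]
```

The amplitude list doubles with every added qubit: a 30-qubit register already needs 2^30, just over a billion, amplitudes, which is why brute-force classical simulation runs out of memory so quickly.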

We’ve already seen moments of “quantum supremacy” or “quantum advantage,” where experimental quantum processors have solved specific, highly constrained problems faster than classical computers. In 2019, for example, Google’s Sycamore processor famously completed a random circuit sampling task in about 200 seconds that Google estimated would take a classical supercomputer roughly 10,000 years. However, these demonstrations have been largely academic, proving the concept rather than delivering immediate practical utility.

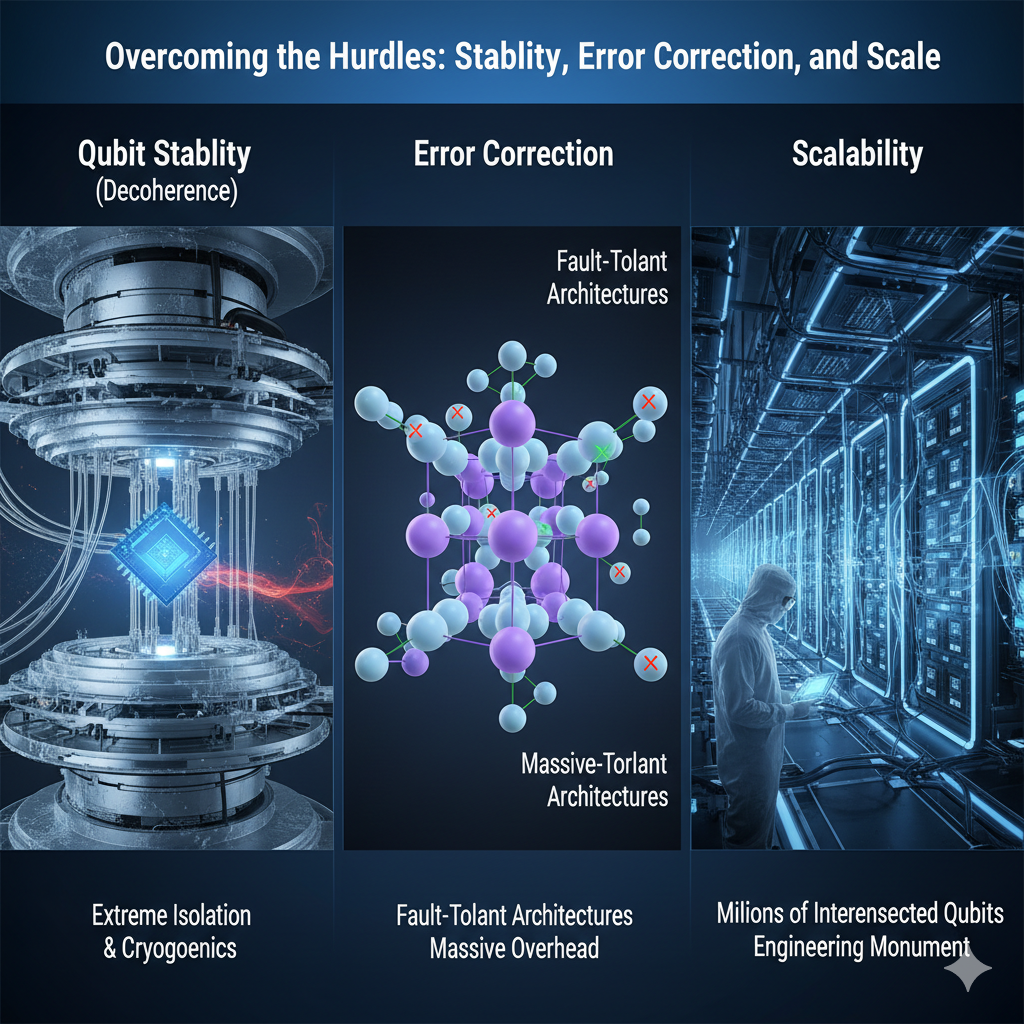

Overcoming the Hurdles: Stability, Error Correction, and Scale

The journey from a laboratory curiosity to a mainstream technology is fraught with significant challenges:

Qubit Stability (Decoherence): Qubits are incredibly fragile and prone to “decoherence,” where they lose their quantum state through interaction with their environment. Maintaining that delicate state requires extreme isolation (often at temperatures near absolute zero) and sophisticated control mechanisms.

Error Correction: Quantum operations are inherently noisy, leading to errors. Building fault-tolerant quantum computers requires massive numbers of physical qubits to encode and protect logical qubits, a feat that is still years, if not decades, away.

Scalability: Current quantum machines house dozens, sometimes hundreds, of qubits. Practical applications will likely require thousands, or even millions, of stable, interconnected qubits. Scaling up while maintaining coherence and connectivity is a monumental engineering challenge.

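The logic behind error correction, and the scaling problem it creates, can be illustrated with the simplest possible code: store one logical bit in d physical copies and decode by majority vote. The sketch below is a classical toy under an assumed model of independent bit flips with probability p; real quantum codes such as the surface code must also handle phase errors and cannot copy quantum states, so they measure parity checks instead. It computes the exact probability that majority voting returns the wrong bit.

```python
from math import comb

def logical_error_rate(p, d):
    """Probability that a majority vote over d noisy copies is wrong.

    Toy model (assumption): each physical bit flips independently with
    probability p, and d is odd. The vote fails when more than d // 2 bits flip.
    """
    return sum(comb(d, k) * p**k * (1 - p) ** (d - k) for k in range(d // 2 + 1, d + 1))

for p in (0.01, 0.6):
    rates = [logical_error_rate(p, d) for d in (1, 3, 5, 7)]
    print(p, ["%.2e" % r for r in rates])
```

For p = 0.01 the logical error rate falls as d grows, while for p = 0.6 it rises: adding qubits helps only when physical errors are below a threshold. In this toy the threshold is 50%; for the surface code it is commonly estimated at around 1%, which is why useful codes need both many qubits and very good ones.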

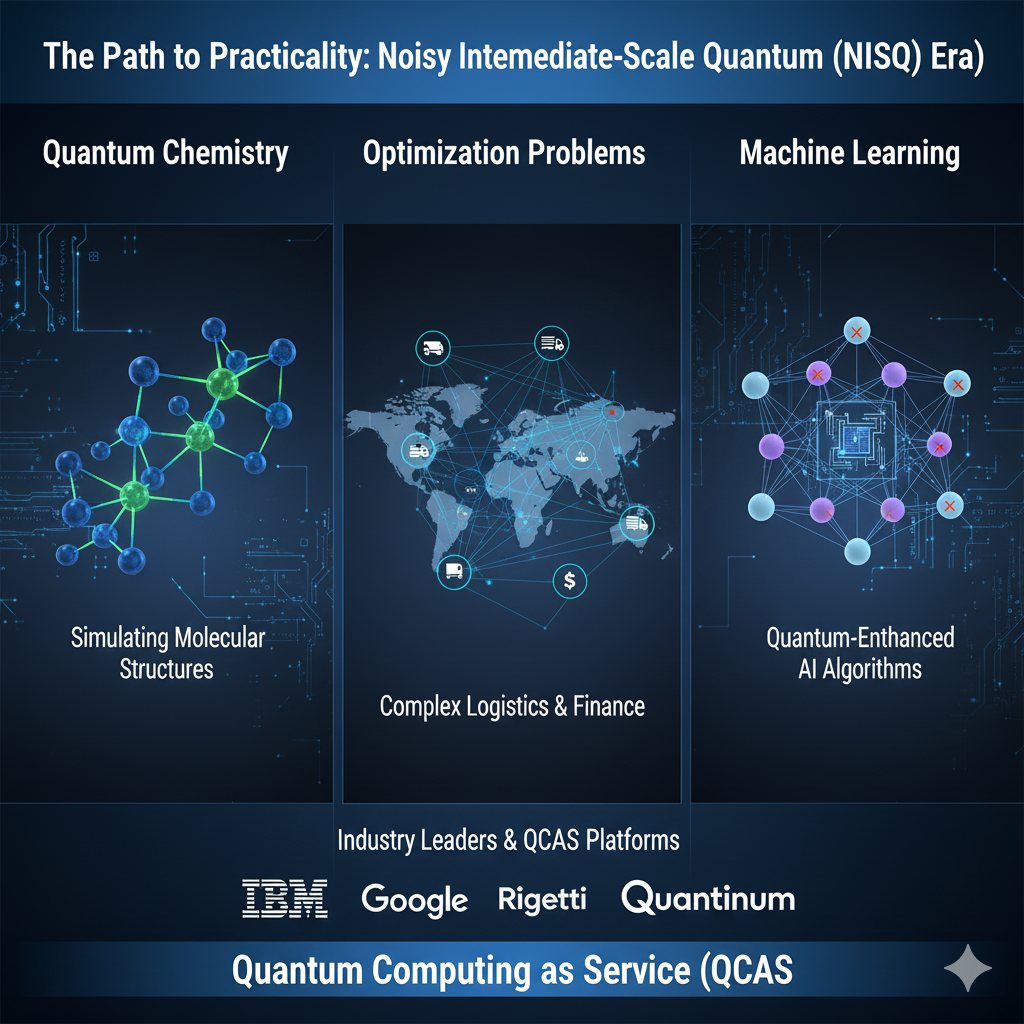

The Path to Practicality: Noisy Intermediate-Scale Quantum (NISQ) Era

We are currently in what researchers call the NISQ (Noisy Intermediate-Scale Quantum) era. During this period, quantum computers have a limited number of qubits and are prone to errors, meaning they can’t yet run the large, error-corrected algorithms needed for many of the most touted applications.

However, the NISQ era is not without its uses. Researchers are exploring algorithms tailored for these smaller, noisier machines for tasks like:

Quantum Chemistry: Simulating molecular structures to design new materials or drugs.

Optimization Problems: Finding optimal solutions for complex logistics, supply chains, or financial portfolios.

Machine Learning: Developing new quantum-enhanced machine learning algorithms.
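Many of these NISQ algorithms are variational: a short, parameterized quantum circuit estimates an energy or cost, and a classical optimizer tunes the circuit's parameters. The sketch below, in the spirit of the variational quantum eigensolver (VQE), simulates the quantum step in plain Python for a one-qubit toy Hamiltonian H = Z + 0.5X. The coefficients are made up for illustration and do not describe a real molecule; gradients use the parameter-shift rule, which needs only two extra circuit evaluations per parameter.

```python
import math

# Hypothetical toy Hamiltonian H = A*Z + B*X on one qubit (coefficients are made up).
A, B = 1.0, 0.5

def energy(theta):
    """Quantum step (simulated): <psi|H|psi> for |psi> = Ry(theta)|0>."""
    c, s = math.cos(theta / 2), math.sin(theta / 2)   # real amplitudes of |0> and |1>
    exp_z = c * c - s * s        # <Z> = |amp0|^2 - |amp1|^2
    exp_x = 2 * c * s            # <X> = 2 * Re(conj(amp0) * amp1)
    return A * exp_z + B * exp_x

def parameter_shift_grad(theta):
    """Exact gradient of energy() from two shifted evaluations (parameter-shift rule)."""
    return (energy(theta + math.pi / 2) - energy(theta - math.pi / 2)) / 2

def vqe(theta=0.1, lr=0.2, steps=200):
    """Classical step: plain gradient descent on the single circuit parameter."""
    for _ in range(steps):
        theta -= lr * parameter_shift_grad(theta)
    return theta, energy(theta)

theta, e = vqe()
exact = -math.hypot(A, B)        # lowest eigenvalue of A*Z + B*X is -sqrt(A^2 + B^2)
print(f"VQE energy {e:.4f}, exact {exact:.4f}")
```

On hardware, `energy` would be estimated from repeated measurements rather than computed exactly, and a real chemistry Hamiltonian would have many more terms and qubits.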

Companies like IBM, Google, Rigetti, and Quantinuum are making significant strides, not only in building more powerful hardware but also in developing software tools and cloud platforms that make quantum computing more accessible to researchers and developers. Quantum computing as a service (QCaaS) is emerging, allowing users to experiment with quantum processors without needing to build their own.

When Will It Go Mainstream?

Many experts estimate that fully fault-tolerant, universal quantum computers are still 10 to 20 years away. However, hybrid classical-quantum approaches and specialized NISQ applications are likely to yield tangible benefits much sooner, perhaps within the next 5 to 10 years.

Mainstream adoption may not look like everyone owning a quantum computer. Instead, it will likely be an invisible infrastructure layer, with quantum accelerators working in concert with classical supercomputers to solve specific, highly complex computational bottlenecks.

The quantum leap isn’t a single jump, but a series of calculated steps. As research continues to chip away at the fundamental challenges, quantum computing will gradually transition from a niche research tool to an indispensable engine driving the next generation of scientific discovery and technological innovation.

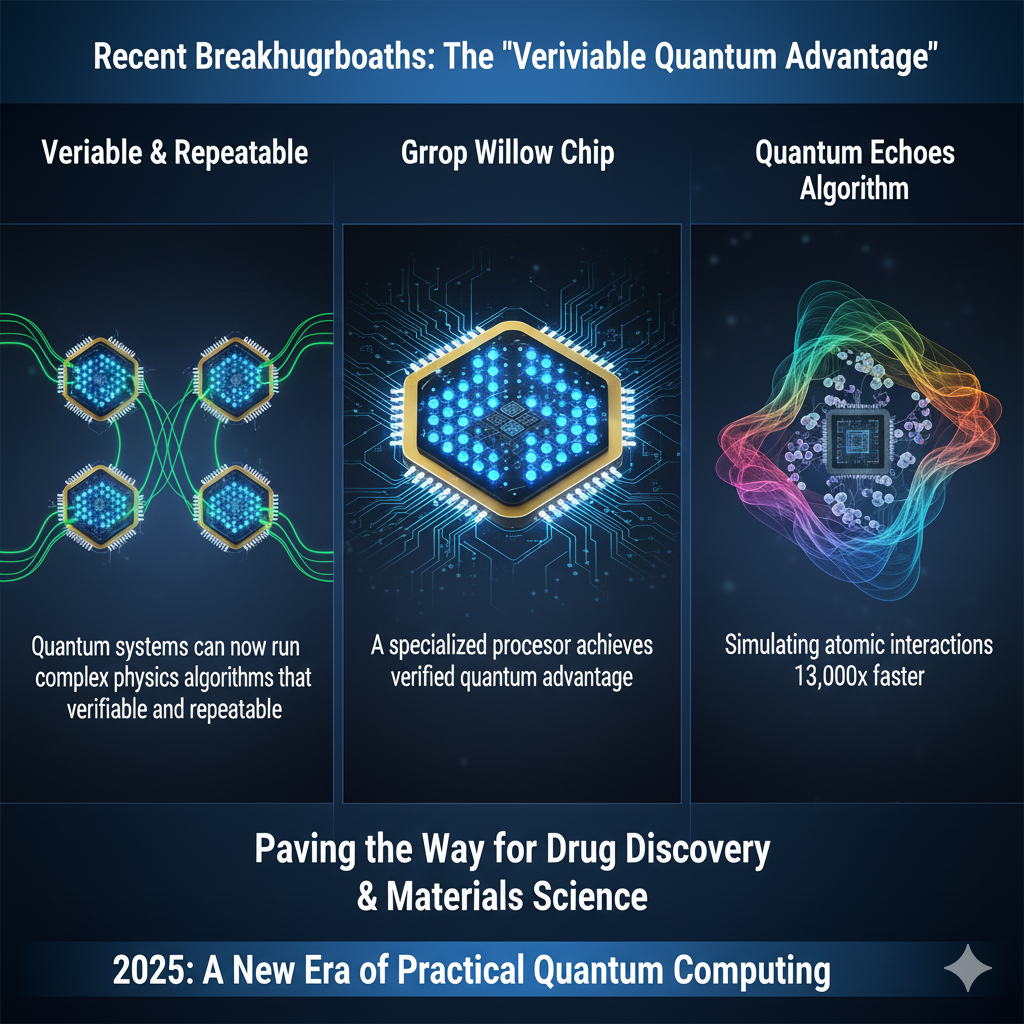

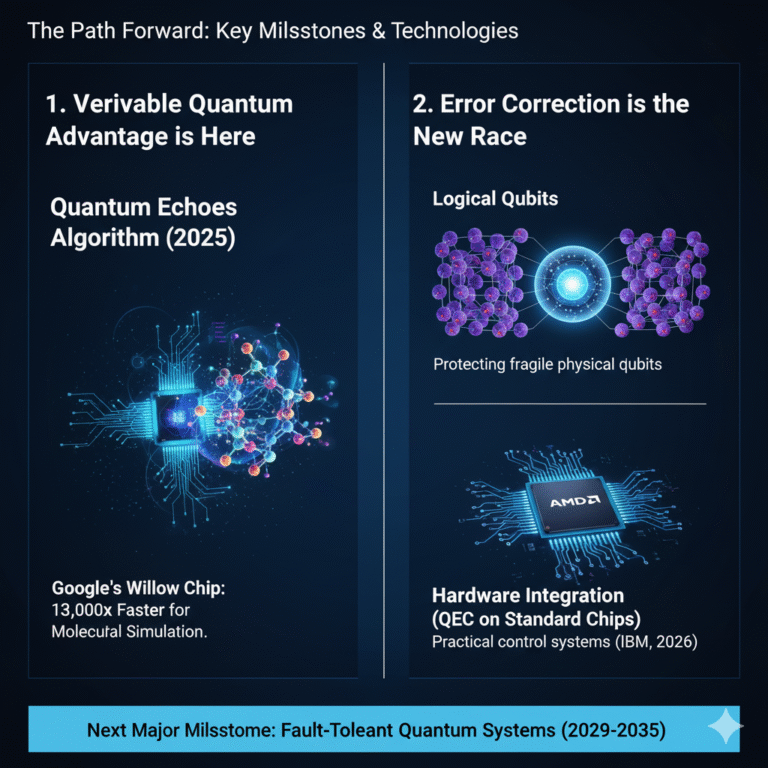

I. Recent Breakthroughs: The “Verifiable Quantum Advantage”

The current year (2025) has seen a shift from theoretical proof-of-concept to verifiable, repeatable demonstrations of quantum advantage, especially in highly specialized tasks.

Google’s Willow Chip: Google recently announced a significant breakthrough using its Willow quantum chip. The system ran a specialized physics algorithm, which they named “Quantum Echoes,” over 13,000 times faster than the best classical algorithm run on one of the world’s fastest supercomputers.

Significance: Unlike earlier “quantum supremacy” announcements, this result is verifiable and repeatable, meaning its outcome can be confirmed by other quantum computers or through experiments. It paves the way for future use in drug discovery and materials science by helping to explain the complex interactions between atoms in a molecule.
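Google has described Quantum Echoes as measuring an out-of-time-order correlator (OTOC): evolve the qubits forward, perturb one qubit, run the evolution backward, and check how far the perturbation has spread. The toy below computes an OTOC exactly for three simulated qubits. The circuit, the choice of Pauli operators (X on qubit 0 as the perturbation, Z on qubit 2 as the probe), and all helper names are illustrative assumptions, not Google's experiment.

```python
# Toy out-of-time-order correlator (OTOC) on 3 simulated qubits (illustrative assumption).
# F = <psi| W(t)^dag V^dag W(t) V |psi>, where W(t) = U^dag W U.

def x(q):
    """Pauli X (bit flip) on qubit q; qubit 0 is the least-significant bit of the index."""
    return lambda s: [s[i ^ (1 << q)] for i in range(len(s))]

def z(q):
    """Pauli Z (phase flip) on qubit q."""
    return lambda s: [-a if (i >> q) & 1 else a for i, a in enumerate(s)]

def cnot(c, t):
    """Flip qubit t wherever qubit c is 1."""
    return lambda s: [s[i ^ (1 << t)] if (i >> c) & 1 else s[i] for i in range(len(s))]

def run(gates, state):
    for gate in gates:
        state = gate(state)
    return state

def otoc(circuit, w, v, n=3):
    psi = [0j] * (2 ** n)
    psi[0] = 1 + 0j                                    # start in |000>

    def w_t(state):
        # W(t) = U^dag W U: apply U, then W, then U^dag. Every gate used here is its
        # own inverse, so U^dag is simply the same gate list in reverse order.
        return run(list(reversed(circuit)), w(run(circuit, state)))

    # Paulis are Hermitian, so W(t)^dag = W(t) and V^dag = V.
    phi = w_t(v(w_t(v(psi))))
    return sum(a.conjugate() * b for a, b in zip(psi, phi))

print(otoc([], w=x(0), v=z(2)))                        # perturbation never reaches qubit 2
print(otoc([cnot(1, 2), cnot(0, 1)], w=x(0), v=z(2)))  # perturbation spreads to qubit 2
```

With no circuit, the perturbation and the probe act on different qubits and the correlator stays at 1; with two CNOTs the perturbation spreads to qubit 2 and the correlator flips to -1. On large chips running more general gates, computing this kind of quantity exactly becomes very expensive for classical machines, which is the regime such experiments target.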

AI and Data Science Engineering

- ROshith

IBM's Error Correction on Commodity Hardware

IBM demonstrated a key quantum error correction (QEC) algorithm running in real time on commercially available AMD field-programmable gate arrays (FPGAs).

Significance: This is a crucial step in making quantum computing practical and affordable. It proves that the control and error-correction systems don’t need prohibitively expensive, custom-built hardware, accelerating the integration of quantum processing units (QPUs) with existing classical high-performance computing (HPC) infrastructure.
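At its simplest, a QEC decoder is a fast classical function from measured syndrome bits to a correction. The sketch below uses the textbook 3-qubit bit-flip code, simulated classically, with a fixed lookup table; it is an illustrative assumption, not the algorithm IBM ran. Only parity checks are read, never the encoded data itself, and a table lookup has a small, fixed cost per round, the kind of workload that maps naturally onto FPGA logic.

```python
# Textbook 3-qubit bit-flip code, simulated classically (illustrative assumption).
# Syndrome = (b0 xor b1, b1 xor b2); each nonzero pattern points to one flipped bit.
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}

def measure_syndrome(bits):
    """Stand-in for the parity-check measurements (on hardware, data qubits stay unread)."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])

def decode(syndrome):
    """Map a syndrome to the index of the bit to flip (None means no error detected)."""
    return CORRECTION[syndrome]

def apply_correction(bits, flip):
    corrected = list(bits)
    if flip is not None:
        corrected[flip] ^= 1
    return corrected

noisy = [1, 1, 0]   # logical 1 encoded as 111, with bit 2 flipped by noise
print(apply_correction(noisy, decode(measure_syndrome(noisy))))   # [1, 1, 1]
```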

II. Quantum Error Correction (QEC) Milestones

| Company | QEC Goal/Milestone | Target Year | Details |
| --- | --- | --- | --- |
| IBM | Fault-Tolerant Quantum Computing (Starling) | 2029 | Outlines a plan to achieve a system with a sufficient number of logical qubits to perform complex calculations reliably. |
| Google (Willow) | Below-Threshold Error Correction | 2024/2025 | Demonstrated that as more physical qubits are used to encode a single logical qubit, the logical qubit's error rate decreases, a crucial milestone toward fault tolerance. |
| Riverlane/Industry Consensus | MegaQuOp System | ~2027 | Expected to be the first error-corrected quantum computer capable of 1 million quantum operations (MegaQuOp), potentially featuring ~100 logical qubits supported by ~10,000 physical qubits. |
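The ratio in the last row can be sanity-checked with a common rule of thumb. Assuming a rotated surface code (the table does not name a code), a distance-d logical qubit uses d^2 data qubits plus d^2 - 1 measurement qubits, so a budget of about 100 physical qubits per logical qubit corresponds to a code distance of about 7. Real designs add overhead for routing, decoding, and magic-state preparation that this estimate ignores.

```python
def physical_per_logical(d):
    """Rotated surface code (assumed): d*d data qubits + (d*d - 1) measurement qubits."""
    return 2 * d * d - 1

budget = 10_000 // 100   # ~10,000 physical qubits for ~100 logical qubits (from the table)
best_d = max(d for d in range(3, 51, 2) if physical_per_logical(d) <= budget)
print(best_d, physical_per_logical(best_d))   # 7 97
```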

III. The Emerging Quantum Ecosystem (Hybrid Computing)

The future of quantum computing is overwhelmingly hybrid, combining the strengths of quantum processors with classical supercomputers.

Quantum-Centric Supercomputing: IBM is pioneering a model that tightly integrates quantum processors with classical computing infrastructure (CPUs/GPUs) via middleware and quantum communication links. This allows for:

Optimized Parallelization: Distributing a single complex problem between the classical and quantum parts for maximum efficiency.

Accelerated Error Control: Using classical resources to quickly run QEC decoding algorithms, which must be executed faster than the quantum errors can accumulate.
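The timing constraint above can be made concrete with a simple queue model: each QEC cycle produces one round of syndrome data, and the decoder must clear rounds at least as fast as they arrive, or a backlog builds up and stalls any computation that waits on decoded results. The cycle and decode times below are hypothetical round numbers, and the model treats decoding as a continuous flow (a fluid approximation).

```python
def backlog_after(cycles, cycle_time_us, decode_time_us):
    """Syndrome rounds still waiting to be decoded after `cycles` QEC cycles (fluid model)."""
    rounds_cleared_per_cycle = cycle_time_us / decode_time_us
    pending = 0.0
    for _ in range(cycles):
        # One new round arrives; the decoder clears what it can in the same interval.
        pending = max(0.0, pending + 1 - rounds_cleared_per_cycle)
    return pending

print(backlog_after(1_000, cycle_time_us=1.0, decode_time_us=0.8))    # keeps up
print(backlog_after(1_000, cycle_time_us=1.0, decode_time_us=1.25))   # falls behind
```

In this model a decoder only 25% too slow is about 200 rounds behind after 1,000 cycles, and the gap keeps growing linearly, which is why decoding speed matters as much as decoding accuracy.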

Quantum Networks (Quantum Internet): Research into quantum networks is intensifying. While initially focused on Quantum Key Distribution (QKD) for ultra-secure communication, the long-term goal is to interconnect multiple quantum computers. This would create a distributed quantum system, effectively allowing researchers to run larger, more powerful computations than any single machine could handle.
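The security idea behind QKD can be illustrated with the textbook BB84 protocol, chosen here as an assumption since the text names no specific protocol. Alice encodes random bits in randomly chosen bases, Bob measures in his own random bases, and both keep only positions where the bases matched. The sketch simulates the measurement statistics classically, including an optional intercept-resend eavesdropper; the function and parameter names are hypothetical.

```python
import random

def bb84(n, eavesdrop=False, rng=None):
    """Simulate BB84 statistics; return (sifted key length, error rate in sifted key)."""
    rng = rng or random.Random()
    alice_bits = [rng.randint(0, 1) for _ in range(n)]
    alice_bases = [rng.randint(0, 1) for _ in range(n)]   # 0 = rectilinear, 1 = diagonal
    bob_bases = [rng.randint(0, 1) for _ in range(n)]

    def measure(bit, prep_basis, meas_basis):
        # Same basis: the outcome is deterministic. Different basis: a fair coin flip.
        return bit if prep_basis == meas_basis else rng.randint(0, 1)

    bob_bits = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if eavesdrop:
            # Intercept-resend attack: Eve measures in a random basis and resends her result.
            e_basis = rng.randint(0, 1)
            bit, a_basis = measure(bit, a_basis, e_basis), e_basis
        bob_bits.append(measure(bit, a_basis, b_basis))

    # Sifting: bases are compared publicly and mismatched positions are discarded.
    keep = [i for i in range(n) if alice_bases[i] == bob_bases[i]]
    errors = sum(alice_bits[i] != bob_bits[i] for i in keep)
    return len(keep), errors / len(keep)

rng = random.Random(7)
print(bb84(20_000, rng=rng))                   # error rate 0.0
print(bb84(20_000, eavesdrop=True, rng=rng))   # error rate near 0.25
```

Without an eavesdropper the sifted keys match exactly; an intercept-resend attacker pushes the error rate to about 25%, which Alice and Bob can detect by publicly comparing a sample of their bits. A real QKD link sends photons and also needs error correction and privacy amplification.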

IV. Commercial Focus and Investment

Financial Market Interest: The financial industry is widely anticipated to be one of the earliest adopters of commercially viable quantum computing, particularly for optimization (e.g., portfolio optimization, risk analysis) and simulation (e.g., modeling complex financial markets).
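Optimization problems like portfolio selection are usually handed to quantum optimizers (for example QAOA circuits or quantum annealers) as a QUBO: a cost function over binary variables. The sketch below builds a small QUBO for choosing exactly two of four assets, trading expected return against risk, and solves it by brute force. The returns, covariances, and weights are hypothetical, and brute force is only feasible because there are just 2^4 candidates; the quantum hardware's role would be to search much larger spaces.

```python
from itertools import product

# Hypothetical data for 4 assets: expected returns and a covariance (risk) matrix.
MU = [0.10, 0.12, 0.07, 0.09]
SIGMA = [
    [0.05, 0.02, 0.01, 0.00],
    [0.02, 0.06, 0.01, 0.01],
    [0.01, 0.01, 0.03, 0.00],
    [0.00, 0.01, 0.00, 0.04],
]
RISK_AVERSION, BUDGET, PENALTY = 1.0, 2, 1.0

def qubo_cost(x):
    """x[i] = 1 means 'hold asset i'. Lower cost is better."""
    n = len(x)
    risk = sum(SIGMA[i][j] * x[i] * x[j] for i in range(n) for j in range(n))
    ret = sum(MU[i] * x[i] for i in range(n))
    budget_violation = (sum(x) - BUDGET) ** 2   # soft constraint: hold exactly BUDGET assets
    return RISK_AVERSION * risk - ret + PENALTY * budget_violation

best = min(product((0, 1), repeat=len(MU)), key=qubo_cost)
print(best, round(qubo_cost(best), 4))
```

The budget constraint is folded into the cost as a squared penalty because QUBO solvers accept no hard constraints; choosing the penalty weight is itself a tuning problem in practice.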

Investment Surge: Over $36 billion in public and private investment has flowed into quantum technology, according to recent outlooks, with a significant portion going into quantum software, compilers, and error correction—underscoring the shift from purely hardware research to building a usable ecosystem.

5 Key Points on the Future of Quantum Computing

1. The Era of Verifiable Quantum Advantage is Here

The goal is no longer just proving that quantum computers can work, but that they can solve a verifiable, real-world relevant problem faster than classical supercomputers. Recent milestones, like Google’s Willow chip running the “Quantum Echoes” algorithm 13,000x faster, confirm this advantage is moving from theoretical possibility to a demonstrable reality for specific, specialized tasks (e.g., molecular simulation).

2. Error Correction is the New Race

The most critical technical challenge is overcoming decoherence and noise, and industry focus has shifted decisively toward Quantum Error Correction (QEC). Companies are now demonstrating:

Logical Qubits: Creating stable, protected logical qubits by grouping many noisy physical qubits together.

Hardware Integration: Running QEC algorithms on standard, affordable hardware (like AMD chips, as shown by IBM) to make the control systems practical for scaling. The next major milestone is building a Fault-Tolerant system (predicted 2029-2035).

3. The Future is Hybrid Quantum-Classical

No single machine will do it all. The practical utility of quantum systems will come from Hybrid Computing Architectures. This involves:

Using classical supercomputers (GPUs/CPUs) for data preparation, control, and post-processing.

Using the specialized quantum processor (QPU) only for the computationally hard, quantum-specific part of the problem (e.g., molecular simulation, complex optimization).

This approach, which IBM calls Quantum-Centric Supercomputing, is the most likely way quantum power will first be delivered to the world.
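One practical consequence of this split is that a QPU returns samples, not numbers: the classical side chooses circuit parameters, the quantum processor returns measurement counts, and classical post-processing turns those counts into an estimate with an error bar. In the sketch below, `fake_qpu` is a hypothetical stand-in that samples the outcomes of a single-qubit Ry(θ) circuit; on real hardware this call would go through a vendor SDK or cloud service.

```python
import math
import random

def fake_qpu(theta, shots, rng):
    """Hypothetical QPU stand-in: measure Ry(theta)|0> in the Z basis `shots` times."""
    p1 = math.sin(theta / 2) ** 2               # probability of reading '1'
    ones = sum(rng.random() < p1 for _ in range(shots))
    return {"0": shots - ones, "1": ones}

def estimate_z(counts):
    """Classical post-processing: <Z> = P(0) - P(1), plus its standard error."""
    shots = counts["0"] + counts["1"]
    mean = (counts["0"] - counts["1"]) / shots
    stderr = math.sqrt(max(0.0, 1 - mean**2) / shots)   # outcomes are +/-1, variance 1 - mean^2
    return mean, stderr

rng = random.Random(2024)
theta = 1.0                                     # classical side chooses the circuit parameter
mean, err = estimate_z(fake_qpu(theta, shots=4000, rng=rng))
print(f"<Z> ~ {mean:.3f} +/- {err:.3f} (exact {math.cos(theta):.3f})")
```

Because the error bar shrinks as one over the square root of the shot count, halving it requires four times as many shots, so shot budgets are a real cost in hybrid workflows.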

4. Early Value is in Simulation and Optimization (NISQ Applications)

While universal, fault-tolerant machines are years away, the current generation of NISQ (Noisy Intermediate-Scale Quantum) computers is already being explored for:

Quantum Chemistry/Materials Science: Simulating complex molecular interactions to accelerate the discovery of new drugs, catalysts, and high-performance materials.

Optimization: Solving massive optimization problems in finance (portfolio risk analysis) and logistics (route planning) where classical algorithms struggle to find the best possible answer quickly.

5. Quantum-as-a-Service (QCaaS) is Democratizing Access

Quantum computing is no longer restricted to a few high-tech labs. Major cloud providers (IBM, Amazon, Microsoft) offer Quantum-as-a-Service (QCaaS), allowing researchers, startups, and businesses to access real quantum hardware and powerful simulation tools over the internet. This model is crucial for:

Workforce Development: Training the next generation of quantum software engineers.

Early Experimentation: Allowing companies to begin planning their “quantum strategy” without massive upfront hardware investments.

Conclusion

The Dawn of Practical Quantum Computing

The journey of quantum computing is accelerating, moving decisively from abstract physics to concrete engineering. We are standing at a critical juncture where the foundational challenges—primarily qubit noise and error correction—are being actively tackled with innovative, real-world solutions.

The era of Noisy Intermediate-Scale Quantum (NISQ) computing is rapidly giving way to systems that demonstrate a verifiable quantum advantage in specialized, high-impact areas like molecular simulation and complex optimization.

Key Takeaway

The future of computing will not be purely quantum, but hybrid. The next decade will be defined by the seamless integration of powerful quantum processors into the classical supercomputing stack, accessible to businesses globally via Quantum-as-a-Service (QCaaS) platforms. This ensures that the transformative power of quantum mechanics will be delivered as an indispensable tool, driving breakthroughs in materials science, finance, and medicine, years before fully fault-tolerant universal quantum computers become a reality.

The quantum leap is not a single, distant event, but a continuous process of calculated breakthroughs that are reshaping the computational landscape right now.